Git is a version control system that lets you manage and keep track of your source code history.

GitHub is a cloud-based hosting service that lets you manage Git repositories.

GitLab is an open source code repository and collaborative software development platform for large DevOps and DevSecOps projects.

Note: Since both GitHub and GitLab are hosting platforms built around the Git version control system (VCS), it can be difficult to choose between the two. The most significant difference is that GitHub is primarily a collaboration platform for reviewing and managing code remotely, while GitLab is focused more on DevOps and CI/CD.

How to download the Git client on Windows and configure it

To download, use the link below:

https://git-scm.com/download/win

Once downloaded, follow the setup wizard to complete the installation.

Once installation is done, open Git Bash, which is installed along with Git for Windows.
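To confirm the installation worked, you can print the installed version in the Git Bash window. A minimal check:

```shell
# Print the installed Git version to confirm the installation worked
git --version
```

The exact version number depends on the release you installed.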

First thing to do before use: configuration

Run the following commands to tell Git who you are, giving your name and email address in double quotes:

git config --global user.name "ibraraziz"

git config --global user.email "ee.ibrarziz.com"
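To check what Git recorded, you can read a setting back with git config. The sketch below is a variant under one assumption: it runs in a throwaway repository and omits --global, so the values go into that repository's .git/config and your real global settings stay untouched. The folder name scratch-repo and the address you@example.com are placeholders.

```shell
# Work in a throwaway folder so your real settings are not changed
tmp="$(mktemp -d)"
git init -q "$tmp/scratch-repo"
cd "$tmp/scratch-repo"

# Without --global the values go into .git/config and apply to this repository only
git config user.name "ibraraziz"
git config user.email "you@example.com"    # placeholder address

# Reading a key back prints the value Git will use for new commits
git config user.name
# --show-origin also reports which configuration file the value came from
git config --show-origin user.email
```

In your own setup, git config --global user.name reads back the global value set with the commands above.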

For information: Git Bash supports many Linux commands as well, so I am going to create a directory on the C drive and initialize it as a Git repository. The git init command turns this folder into a local Git repo.

cd /c (go to the C drive)

mkdir gitrepo (creates a folder named gitrepo)

git init gitrepo (initializes the gitrepo folder as a local Git repo)
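Here is a sketch of the same steps in a temporary folder instead of C:\, so it can be tried anywhere (the temporary path is the only assumption). It shows what git init actually creates:

```shell
# Use a temporary folder in place of C:\ so the sketch runs anywhere
tmp="$(mktemp -d)"
cd "$tmp"

mkdir gitrepo
git init -q gitrepo          # creates gitrepo/.git, the repository's database

# The folder now contains a hidden .git directory
ls -a gitrepo
# Git recognises the folder as a working tree
git -C gitrepo rev-parse --is-inside-work-tree    # prints: true
git -C gitrepo status --short                      # prints nothing: no files yet
```

Everything Git tracks for the repository lives in that hidden .git folder; deleting it turns gitrepo back into an ordinary folder.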

Now suppose you want to clone (copy) a public repository from GitHub to your local system.

Use the following commands:

cd gitrepo

git clone https://github.com/vmware-tanzu/velero

This downloads the public repository, including its history, into a new velero folder on your local system.
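Cloning from GitHub needs network access, so the sketch below uses a small local repository (the hypothetical source folder) as a stand-in for the public repo; with the real URL only the argument to git clone changes.

```shell
tmp="$(mktemp -d)"
cd "$tmp"

# Stand-in for a public GitHub repository: a local repo with one commit
git init -q source
echo "hello" > source/README.md
git -C source add README.md
git -C source -c user.name="Demo" -c user.email="demo@example.com" commit -q -m "Initial commit"

# Clone it; with a real URL only the argument changes, e.g.
#   git clone https://github.com/vmware-tanzu/velero
git clone -q source velero-copy

# The clone has the files, the full history, and a remote named "origin"
cat velero-copy/README.md          # prints: hello
git -C velero-copy log --oneline   # one line: "<hash> Initial commit"
git -C velero-copy remote -v       # origin pointing at .../source (fetch and push)
```

For a large repository where you only need the latest files, git clone --depth 1 followed by the URL fetches just the most recent commit instead of the full history.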

HOW TO PUSH A LOCAL REPO TO A PUBLIC REPO

If you make changes in your local repo and want to push them to your public repo, use the following commands.

Pre-req:

You need a GitHub account.

Create a remote repository there (in this example it is named newfromlocal).

Go to the local repo folder whose files you want to push:

cd localrepo (a folder already initialized with git init that holds the files you want to push)

Inside that folder, add the remote repo's URL under the name origin:

git remote add origin https://github.com/ibraraziz/newfromlocal

Once the remote origin is added, stage, commit, and push the files:

git add . (the . at the end stages all files in the current folder)

git commit -m "My first commit" (any message, in double quotes)

git push --set-upstream origin master (use main instead of master if that is your branch name; git branch shows it)
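The whole push flow can be tried offline by letting a local bare repository (the hypothetical newfromlocal.git folder) play the role of the empty GitHub repo. This is a sketch under that assumption: with GitHub, only the remote URL differs, and the push triggers the sign-in pop-up. The inline -c user.name and -c user.email options are there only so the sketch runs without a global identity.

```shell
tmp="$(mktemp -d)"
cd "$tmp"

# A bare repository stands in for the empty "newfromlocal" repo created on GitHub
git init -q --bare newfromlocal.git

# The local repository with the files to push (-b needs Git 2.28 or newer)
git init -q -b master localrepo
cd localrepo
echo "first file" > notes.txt

# Register the remote under the name "origin"; on GitHub this would be
#   git remote add origin https://github.com/ibraraziz/newfromlocal
git remote add origin "$tmp/newfromlocal.git"

git add .                                   # stage every file in the folder
git -c user.name="Demo" -c user.email="demo@example.com" commit -q -m "My first commit"

# Push master and remember origin/master as its upstream
git push -q --set-upstream origin master

# Later pushes from this branch need no arguments
git status -sb      # first line: "## master...origin/master"
```

Because --set-upstream records origin/master as the tracking branch, a plain git push or git pull on master works from then on.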

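When you run the push command, a pop-up window appears asking you to sign in to GitHub.

Sign in, and you will get a success message. Note that GitHub no longer accepts your account password for Git operations over HTTPS; sign in through the browser option in the pop-up, or use a personal access token in place of the password.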

Git Bash shows the result of the push, and you can also see on GitHub that the local repo has been pushed to the public repo:

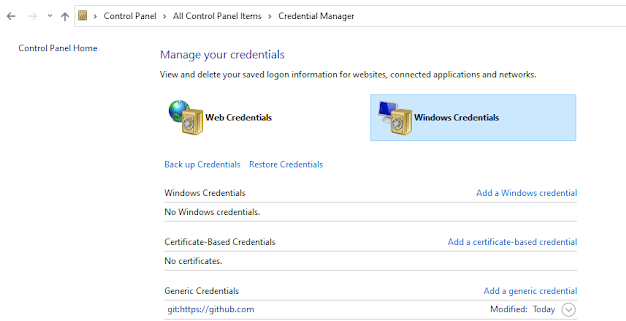

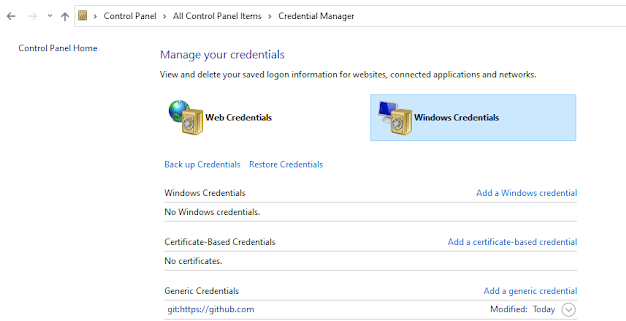

Informational note: Git stores the password on your computer at the path below. This is useful when you want to enter a new password, or when you entered a wrong password by mistake and want the password pop-up to appear again.
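If a wrong password was saved, one way to clear it from the command line is to ask Git's credential helper to forget it. The sketch below assumes the stored login is for https://github.com; git credential reject passes the erase request to whichever helper is configured.

```shell
# Show which credential helper(s) Git uses (on Git for Windows, a value such as "manager")
git config --get-all credential.helper || echo "no credential helper configured"

# Tell the helper(s) to forget the saved login for github.com.
# The input is key=value lines ended by a blank line, as `git credential` expects.
printf 'protocol=https\nhost=github.com\n\n' | git credential reject
# The next push to github.com should prompt for credentials again
```

On Windows, the saved entry can typically also be removed by hand in the Windows Credential Manager.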

The most commonly used commands are:

git config

git init

git add

git commit

git pull

git push

git clone

git status

git log

git remote

git merge

git branch

git checkout
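As a quick tour of several commands from this list, the sketch below creates a scratch repository, makes a branch, and merges it back. The repository name demo and the identity values are placeholders; -b master needs Git 2.28 or newer.

```shell
tmp="$(mktemp -d)"
cd "$tmp"
git init -q -b master demo
cd demo
# Identity set for this repository only (placeholder values)
git config user.name "Demo"
git config user.email "demo@example.com"

echo "v1" > app.txt
git add app.txt
git commit -q -m "Add app.txt"

git branch feature              # create a branch
git checkout -q feature         # switch to it
echo "v2" > app.txt
git status --short              # prints: " M app.txt" (modified, not yet staged)
git commit -q -am "Update app.txt"

git checkout -q master
git merge -q feature            # fast-forward: master moves up to feature's commit
git log --oneline               # two commits, newest first
git branch                      # lists feature and master, * marks the current branch
```

Because master had no new commits of its own, the merge is a fast-forward and creates no merge commit.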

By: Ibrar Aziz (Cloud Enthusiast)
