Jenkins is an open-source continuous integration and continuous delivery/deployment (CI/CD) automation tool for DevOps, written in Java. It is used to implement CI/CD workflows called pipelines.

DevOps:

DevOps is best explained as people working together to create, build and deliver secure software at the highest speed. DevOps practices enable software developers (devs) and operations (ops) teams to accelerate delivery through automation, collaboration, rapid feedback, and iterative improvement.

DevOps represents a change in mindset for IT culture. Based on agile, lean practices and systems theory, DevOps focuses on incremental development and rapid software delivery. Success depends on the ability to create a culture of accountability, better collaboration, empathy and shared responsibility for business results.

Process flow:

1. A developer develops an application.

2. The developer submits the code to GitLab.

3. A reviewer reviews the code and merges it into the appropriate branch.

4. Jenkins continuously monitors that branch and starts building the code when it changes.

5. Jenkins builds a container image, tags it, and pushes it to Docker Hub.

6. Jenkins deploys the image to the Kubernetes cluster.

7. Kubernetes rolls out the updated image using a rolling update strategy.
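The build, push, and deploy steps of the process flow above can be sketched as the commands a pipeline would run. This is a minimal sketch, not the pipeline from the linked repository: the image name `myuser/myapp`, the tag, the Deployment name, and the container name are hypothetical placeholders, and it assumes Docker is already logged in to Docker Hub and `kubectl` points at the target cluster. `kubectl set image` changes the Deployment's pod template, which is what makes Kubernetes perform the rolling update.

```python
import subprocess


def pipeline_commands(image_repo: str, tag: str, deployment: str,
                      container: str, namespace: str = "default") -> list[list[str]]:
    """Return argv lists for the build, push and deploy stages.

    All names passed in are hypothetical placeholders for illustration.
    """
    image = f"{image_repo}:{tag}"
    return [
        # Build and tag the image from the Dockerfile in the current directory.
        ["docker", "build", "-t", image, "."],
        # Push the tagged image to Docker Hub (assumes a prior `docker login`).
        ["docker", "push", image],
        # Point the Deployment's container at the new image; this starts a rolling update.
        ["kubectl", "set", "image", f"deployment/{deployment}",
         f"{container}={image}", "-n", namespace],
        # Wait for the rolling update to finish.
        ["kubectl", "rollout", "status", f"deployment/{deployment}", "-n", namespace],
    ]


def run_pipeline(commands: list[list[str]], dry_run: bool = True) -> None:
    for argv in commands:
        if dry_run:
            # Only print what would be executed.
            print(" ".join(argv))
        else:
            # check=True raises on the first failing stage, stopping the pipeline.
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    run_pipeline(pipeline_commands("myuser/myapp", "42", "myapp", "myapp"))
```

In a real Jenkins job these commands would more likely live in `sh` steps of a Jenkinsfile; the Python form is only meant to make the order of the stages explicit.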

Deployment on a Kubernetes Cluster

1. Go to the repository:

https://github.com/ibraraziz/kubernetes-jenkins

2. Clone the repository with a Git client, for example: git clone https://github.com/ibraraziz/kubernetes-jenkins

8. Access Jenkins in a browser using the exposed IP and port, here 10.50.49.28 and port 8080 (these will differ in your environment).

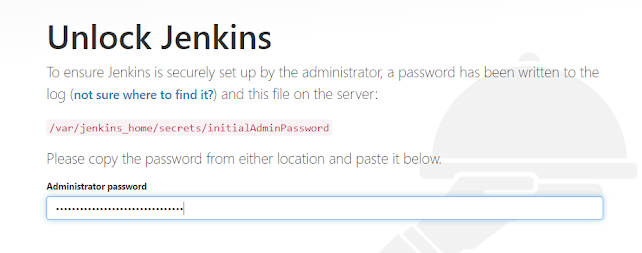

9. Retrieve the initial admin password to unlock Jenkins:

   1. List the pods to find the Jenkins pod.

   2. Exec into the pod and read the file at the path shown in the UI above.

   3. Copy the password and paste it into the UI.
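The password steps above can be scripted with `kubectl`. This is a minimal sketch under stated assumptions: Jenkins runs from the official `jenkins/jenkins` image, which writes the one-time unlock password to `/var/jenkins_home/secrets/initialAdminPassword` (use the path your unlock screen shows if it differs), and the namespace and pod names are hypothetical. Running `cat` through `kubectl exec` avoids opening an interactive shell in the pod.

```python
import subprocess

# Assumption: default location for the official jenkins/jenkins image;
# replace with the path shown on your Jenkins unlock screen if it differs.
INITIAL_PASSWORD_PATH = "/var/jenkins_home/secrets/initialAdminPassword"


def list_pods_cmd(namespace: str) -> list[str]:
    # Sub-step 1: list pods in the namespace to find the Jenkins pod's name.
    return ["kubectl", "get", "pods", "-n", namespace]


def read_password_cmd(pod: str, namespace: str,
                      path: str = INITIAL_PASSWORD_PATH) -> list[str]:
    # Sub-step 2: run `cat` inside the pod to print the password file.
    return ["kubectl", "exec", "-n", namespace, pod, "--", "cat", path]


def get_initial_password(pod: str, namespace: str) -> str:
    # Sub-step 3: capture the password so it can be pasted into the UI.
    result = subprocess.run(read_password_cmd(pod, namespace),
                            capture_output=True, text=True, check=True)
    return result.stdout.strip()
```

For example, with a hypothetical pod `jenkins-0` in a hypothetical namespace `devops-tools`, `get_initial_password("jenkins-0", "devops-tools")` would return the string to paste into the unlock screen.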

Congratulations! Jenkins is now deployed and accessible.
